Aided Target Recognition using Imprecise and Uncertain Data

Target detection is a paramount area of research in the field of remote sensing which aims to locate an object or region of interest while suppressing unrelated objects and information (Geng 2017, Chaudhuri 1995, Zare 2018). Traditional supervised learning approaches require extensive amounts of highly precise, sample-level or pixel-level groundtruth to guide algorithmic training. However, acquiring large quantities of accurately labeled training data can be expensive, both in terms of time and resources, or may even be unattainable.

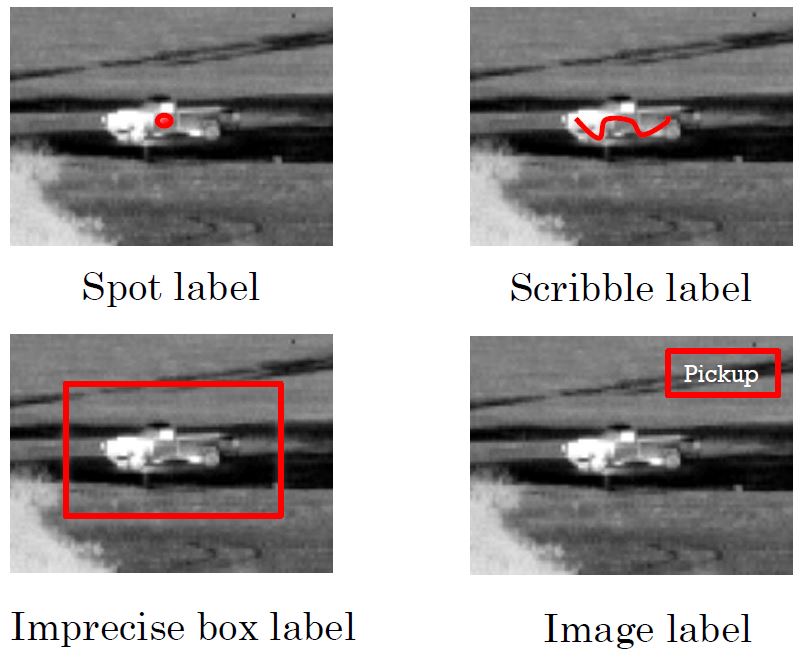

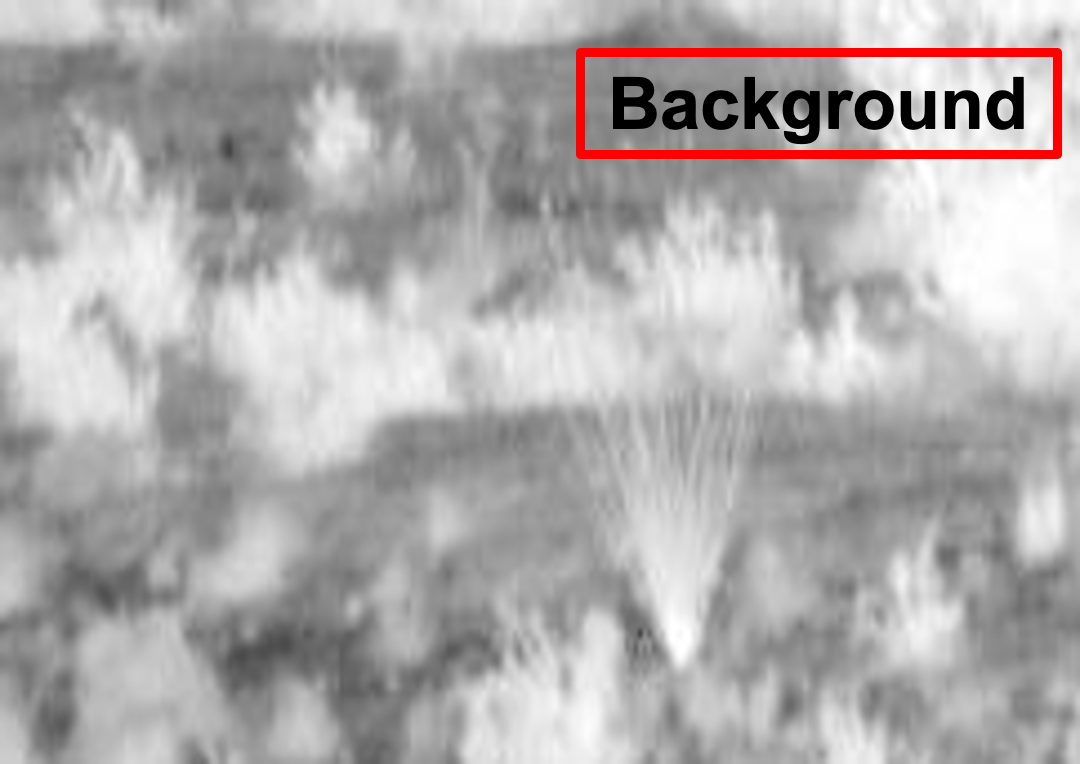

The figures in this post show military and civilian vehicles captured by mid-wave infrared (MWIR) sensors. It is conceivable that an algorithm could be designed to detect if vehicles are present in the imagery, as well as denote which classes the vehicles belong to. In fact, many computer vision algorithms have already been developed to perform target detection using exact, pixel-level segmentation masks and tight, canonical bounding boxes (Figure 1). Yet, pixel-level groundtruth are often subjective and drawing a tight box around every training target in a dataset is extremely tedious, taking teams of annotators thousands of hours to complete. A practical workaround to strenuous labeling is to simply use imprecise or weak labels. Imprecise labels can be considered as annotations which are not incorrect, but less accurate than traditional labels used in supervised learning. Examples of imprecise labels include: relaxed bounding boxes (Figure 1), spot labels (Figure 2), and scribble annotations (Figure 2). As shown in Figure 3, images can even be annotated at a high level as including or excluding target pixels.

While the benefits of imprecise labels are apparent, current approaches in the literature which learn from weakly annotated data are drastically outperformed by their strictly supervised counterparts in pixel-level target detection tasks. Thus, our current efforts are focused on improving the mappings from weak annotations to pixel-level segmentations. Presently, we are exploring the use of bag-level manifold information to promote instance discriminability under the weak learning paradigm of multiple instance learning. In contrast to traditional target detection approaches, our work learns from weakly-labeled groundtruth.

References

-

A. Zare, C. Jiao and T. Glenn, “Discriminative Multiple Instance Hyperspectral Target Characterization” in IEEE Trans. Pattern Anal. Mach. Inteli. vol. 40, num 10, pp 2342-2354. Oct. 2018.

-

X. Geng, L. Ji and Y. Zhao, “The basic equation for target detection in remote sensing,” in CoRR. 2017.

-

B. Chaudhuri and S. Parui, “Target Detection: Remote Sensing Techniques for Defence Applications,” in Defence Science Journal. vol 45, pp 285-291. Apr. 1995.